AI Scams: A Guide to Defending Yourself

- Anna Galaktionov

- Oct 31, 2024


By Almendra Carrion

Artificial intelligence is a significant innovation. It offers remarkable efficiencies, such as generating designs from a few simple keywords, and much more, limited mainly by your imagination. This capability has led to playful applications, such as creating voice-overs for videos, generating unique characters for games, or even making prank calls with synthesized voices. However, alongside these advantages comes a set of concerns that cannot be ignored. Many people fear that AI will be misused, particularly to commit fraud.

AI-driven scams are a particularly dangerous threat. Criminals can impersonate the voices of loved ones, making it difficult for victims to tell whether they are talking to a trusted friend or a total stranger. This deception leads people to hand over money or personal information under false pretenses. The emotional manipulation adds another layer of complexity, as victims may feel a sense of urgency or obligation to help what they believe is a loved one in need.

In the digital age, scams have evolved from generic manipulation to highly targeted deception. Previously, scammers relied on emotional tactics like catfishing and fake personas to build trust before asking for money. Now, thanks to AI’s ability to analyze personal data, they can skip the relationship-building phase and demand money directly, with alarming efficiency.

These scams manifest in various forms, each presenting unique challenges and dangers. The potential for significant financial loss, along with the emotional toll of being deceived, poses serious threats to personal safety and the well-being of families. Therefore, maintaining vigilance is essential for Barry students. Staying informed about the tactics employed by scammers helps students better prepare for and respond to these threats.

Here is a breakdown of the different types of scams that use advanced technology such as AI:

Voice Cloning Scams

• Scammers use AI to replicate a person’s voice, often mimicking someone the victim knows.

• This impersonation can lead to requests for money or sensitive information under the guise of a trusted individual.

Deepfake Video Scams

• Scammers create realistic videos using deepfake technology to impersonate someone else, such as a celebrity or public figure.

• These videos can spread misinformation or solicit money by presenting fake endorsements or appeals.

Deepfake Video Call Scams

• Utilizing deepfake technology in real-time video calls, scammers can impersonate someone the victim knows.

• This tactic increases the emotional manipulation involved, making victims more likely to comply with requests.

AI-Generated Image Scams

• Scammers generate fake images using AI to create misleading visuals, such as fake identification or fraudulent promotional materials.

• These images can be used in various scams, including fake charities or phishing schemes.

AI-Generated Websites

• Scammers create convincing websites that mimic legitimate businesses or services using AI-generated content.

• Victims may unknowingly provide personal information or make purchases from these fraudulent sites.

AI-Enhanced Phishing Emails

• With AI, scammers can create highly tailored and persuasive phishing emails that seem to originate from reliable sources.

• These emails often trick individuals into clicking malicious links or providing sensitive information.

AI-Generated Listings

• Scammers post fake listings for products or services using AI-generated content and images.

• Victims may pay for items that do not exist or are misrepresented, leading to monetary loss.

Particular attention should be given to protecting vulnerable people, such as parents and grandparents, who may be less familiar with the rapidly evolving digital landscape. Education and awareness are critical parts of any defense against deceptive practices. By talking openly about the risks of these technologies and sharing knowledge about how to recognize fraud, communities can create a safer environment for everyone. Taking proactive measures and encouraging skepticism in suspicious situations can significantly reduce the chances of falling victim.

To protect yourself, learn to recognize the signs of deepfakes and voice cloning. With cloned voices, listen for odd inflections; these systems often lack the natural nuance of human speech, which can give them away. For deepfake videos, watch for inconsistencies in facial features: overly smooth or unusually wrinkled skin, unnatural expressions, and stilted emotional displays.

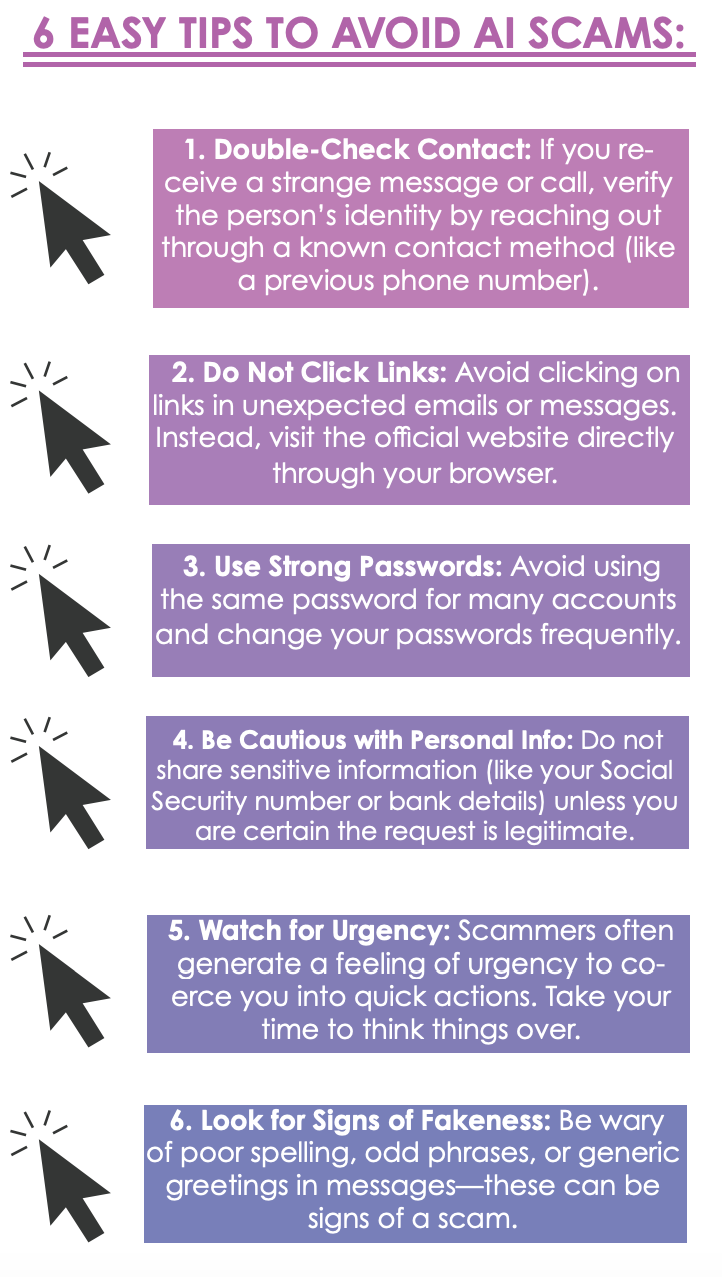

Here are some additional tips to safeguard yourself:

• Establish a safe word with loved ones so you can verify each other's identity during calls or messages.

• Avoid sending money via Bitcoin or gift cards; scammers prefer these payment methods because the money is difficult to trace and recover.

• Consider using deepfake detection software for an extra layer of security.

• If you encounter a scam, report it online at reportfraud.ftc.gov. The process is straightforward: just click “report now” and follow the steps provided.

Stay vigilant and protect yourself!
